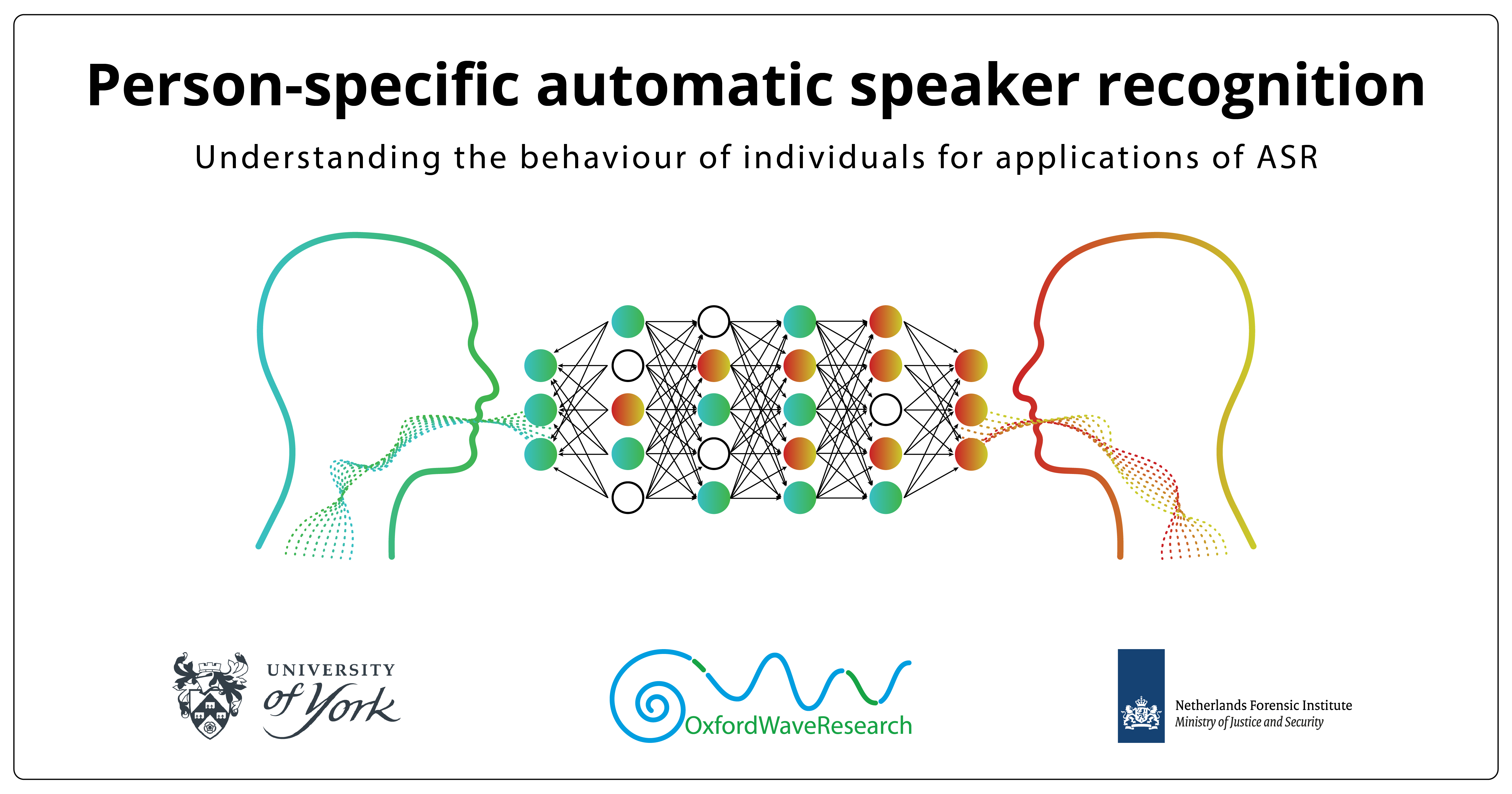

Oxford Wave Research are delighted to be collaborators with the University of York and the Netherlands Forensic Institute in a recently awarded ESRC-funded project (£1,012,570), ‘Person-specific automatic speaker recognition: understanding the behaviour of individuals for applications of ASR’ (ES/W001241/1). The three-year project is led by Dr Vincent Hughes (PI), Professor Paul Foulkes (CI) and Dr Philip Harrison in the Department of Language and Linguistic Science at the University of York. It is due to start in summer 2022 and run until 2025. OWR will provide expertise and consultancy in automatic speaker recognition, along with its flagship VOCALISE forensic speaker recognition system.

Automatic speaker recognition (ASR) software processes and analyses speech to inform decisions about whether two voices belong to the same individual or to different individuals. Such technology is becoming an increasingly important part of our lives: it is used as a security measure when gaining access to personal accounts (e.g. banks), and as a means of tailoring content to a specific person on smart devices. Around the world, such systems are also commonly used for investigative and forensic purposes, to analyse recordings of criminals' voices where the speaker's identity is unknown.

The aim of this project is to systematically analyse the factors that make individuals easy or difficult to recognise within automatic speaker recognition systems. By understanding these factors, we can better predict which speakers are likely to be problematic, tailor systems to those individuals, and ultimately improve overall accuracy and performance. The project will use innovative methods and large-scale data, uniting expertise from linguistics, speech technology and forensic speech analysis across the academic, professional and commercial sectors. This has been made possible through the University of York’s strong collaboration with Oxford Wave Research and two project partners, including the Netherlands Forensic Institute (NFI).

The University of York and OWR teams are looking forward to a very fruitful collaboration that will undoubtedly further the state of the art in forensic speaker recognition.

Dr Vincent Hughes, Principal Investigator, University of York says “We are delighted to be working so closely on this project with Oxford Wave Research, who are world leaders in the field of automatic speaker recognition and speech technology. We hope that our research will deliver major benefits to the fields of speaker recognition and forensic speech science”.

Dr Anil Alexander, CEO, Oxford Wave Research says “Our team led by Dr Finnian Kelly is thrilled to contribute to this in-depth study of the individual-specific factors affecting speaker recognition, with the accomplished research team led by Dr Hughes from the University of York who are at the forefront of this space, and real-world end users like the Netherlands Forensic Institute who have been driving research and innovation in this space for many years”.